Academic Influence Releases Free Tool to Detect Ghostwriting

Recently, the website Academic Influence, best known for its custom college ranking tool, released a free tool that it says can help instructors detect ghostwritten content.

The new tool is called GhostDetect, and it works by analyzing a reference text that is known to be written by the author and a query text that is in question. The two works are then put through roughly a dozen different tests, each of which produces quantifiable results that can be compared with one another.
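To make the idea of side-by-side, quantifiable tests concrete, here is a minimal Python sketch. It is not GhostDetect's code: the function names, the naive sentence splitter and the three metrics chosen are all assumptions made for illustration, and the real tool runs a larger and different set of tests.

```python
import re

def split_sentences(text):
    # Naive splitter (an assumption): break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def tokenize(text):
    # Lowercased runs of ASCII letters and apostrophes; real tools tokenize more carefully.
    return re.findall(r"[a-z']+", text.lower())

def metrics(text):
    # Three simple, hypothetical style measurements for one document.
    sentences = split_sentences(text)
    tokens = tokenize(text)
    n_sent = max(len(sentences), 1)  # guard against empty input
    n_tok = max(len(tokens), 1)
    return {
        "avg_sentence_length": len(tokens) / n_sent,
        "avg_word_length": sum(len(w) for w in tokens) / n_tok,
        "type_token_ratio": len(set(tokens)) / n_tok,
    }

def compare(reference, query):
    # Pair each metric as (reference value, query value) for side-by-side reading.
    ref, qry = metrics(reference), metrics(query)
    return {name: (ref[name], qry[name]) for name in ref}

if __name__ == "__main__":
    report = compare(
        "The cat sat. The dog ran off.",
        "I like long walks on the beach every day.",
    )
    for name, (ref_value, qry_value) in report.items():
        print(f"{name}: reference={ref_value:.2f} query={qry_value:.2f}")
```

Like the tool itself, a sketch of this kind only reports numbers. Deciding whether a gap between the two columns means anything is left to the reader.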

The goal is not to provide any final judgment, but to present the user with enough data that they are able to quickly make a determination on their own.

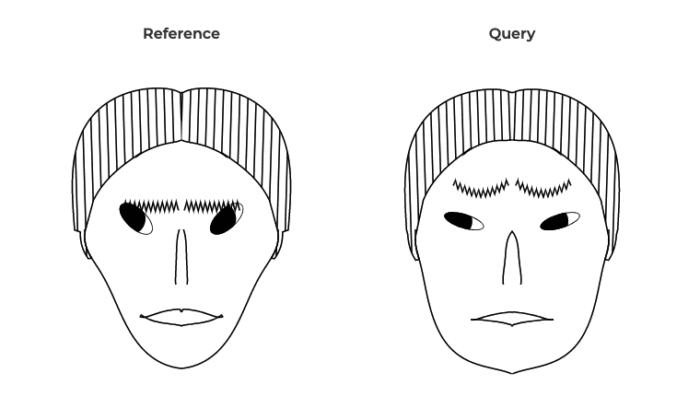

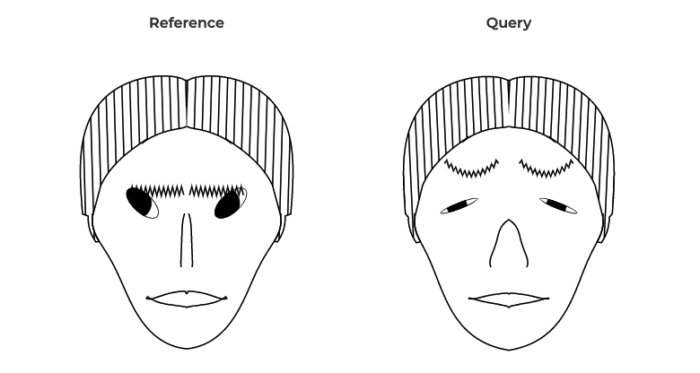

One of the more unusual data points is the creation of Flury-Riedwyl faces. These faces combine the various data points that the tool found and present them as a visual face. According to the site’s explanation, this works well because humans are conditioned to quickly spot differences and similarities in faces, making such faces a quick way of comparing the works.

But how well does the tool work? I decided to take it for a test drive using some of my own content and find out for myself.

Testing the Tool

To test the tool out, I first wanted a baseline. To that end, I took my two most recent full articles on the site: the one about Activision plagiarizing an upcoming character’s design and the one about the challenges of determining podcast plagiarism.

Since the latter is the older, by a day, I opted to use that as the reference. What I found was interesting.

First, I noticed that, while the Flury-Riedwyl faces for the two documents were somewhat similar, they were still notably different.

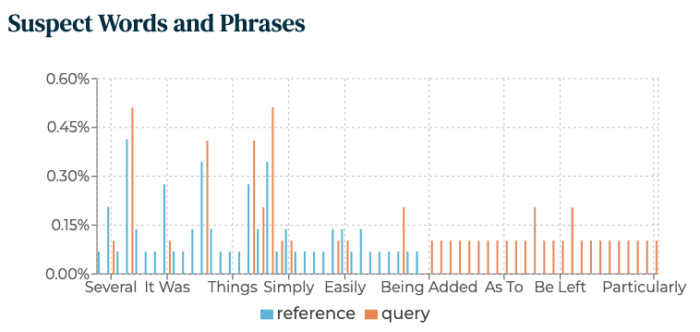

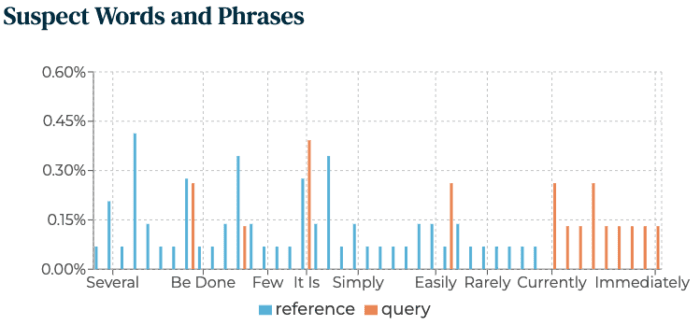

Diving into the numerical data, it was apparent that the two pieces were both about equally difficult to read from a grade level standpoint, but that the latter had a significantly higher average sentence length, 21.71 to 18.16. Also interesting, the query document had a large number of “suspect words” that were not in the original.
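The “suspect words” figure can be pictured as a simple vocabulary difference. The sketch below is an assumption based only on the description above (words in the query document that do not appear in the reference); GhostDetect's actual definition, tokenization and any length or frequency thresholds are not documented here, so `suspect_words` and its `min_length` parameter are hypothetical.

```python
import re
from collections import Counter

def tokenize(text):
    # Lowercased runs of ASCII letters and apostrophes (a simplification).
    return re.findall(r"[a-z']+", text.lower())

def suspect_words(reference, query, min_length=5):
    # Count query words that never occur in the reference text.
    # min_length is a hypothetical filter to skip short, common words.
    known = set(tokenize(reference))
    counts = Counter(
        word for word in tokenize(query)
        if len(word) >= min_length and word not in known
    )
    return counts.most_common()

if __name__ == "__main__":
    reference = "The student wrote about plagiarism in podcasts."
    query = "The student wrote about legislation and legislation reform."
    print(suspect_words(reference, query))
```

A change of topic alone will fill a list like this with new vocabulary, so a long list of such words does not on its own show that a different person wrote the query text.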

Despite the notable differences, the two documents were still fairly similar in all areas. So, with that in mind, I kept the reference document the same and, for the query document, used an article by reporter Marie Woolf that was published in the Toronto Star.

The faces didn’t, at first glance, appear drastically more different than in the first comparison. Though there are key differences, there are also similarities. The two documents also shared similar grade levels and had a similar difference in sentence length.

What was actually most telling was the “Suspect Words”, which did show a clear difference between the two documents.

So while the tool did point to significant differences between the two works, one had to pay close attention to the data to spot differences that weren’t just normal variations for the same author.

However, this raises a bigger question: What would one do with this information?

Applying the Findings

In the ideal use case, an instructor would notice a change in a student’s writing style, use the tool to quantify how the new work differs from the student’s previous work and prove that the new one was ghostwritten.

However, that doesn’t actually work.

The problem is simple: while this tool highlights quantifiable differences between two documents, it can’t prove that the documents were written by two different people. Though individuals generally have a writing style, that style can change a great deal from work to work.

For example, works on different subjects, works where different amounts of time were spent or works where different editors were available could produce radically different scores. However, in those cases, authorship would still be the same.

This is especially true in schools, where students are learning and growing as writers. If a student puts no effort into one essay but works diligently on another, including seeking editorial help, it could produce radically different results.

So, while GhostDetect can certainly point to interesting and useful similarities and differences between two texts, it can’t prove authorship. Other tools, such as the ones used by Turnitin and Unicheck, require many thousands of words of baseline text to compare any new works against. Even with that effort, such results are rarely final.

What GhostDetect does is incredibly smart, if limited. It avoids using artificial intelligence or closed algorithms and, instead, processes the work using a dozen or so known analysis tools and presents those findings side by side in one place.

To be clear, this is extremely useful. But it’s difficult to see how this could serve as definitive proof of ghostwriting or contract cheating without additional information.

Bottom Line

GhostDetect is an incredibly interesting and potentially useful tool. It collects a wide variety of quantifiable data about two works and makes it easy to compare them. In addition to helping detect possible ghostwriting, it can also be a great educational tool, letting students or authors see how similar (or dissimilar) their work is to that of others.

However, as a tool for detecting and proving ghostwriting, it seems a bit more dubious. Given that individuals can have dramatic shifts in their writing style, especially when they are learning, the differences indicated do not necessarily prove a difference in authorship, only that one is likely.

Still, a new tool in this fight, and in particular a free one, should not be taken lightly. This is especially true since it produces quantifiable results.

This may not be a game changer when it comes to stopping contract cheating in schools, but it is still progress, and it is still helpful, which is precisely why I wanted to highlight it here.
