Bad Bots, Your Site and Your Content

If you’re like most webmasters, you probably think the bulk of your traffic comes from human beings visiting your site. However, study after study has shown that’s not the case, with well over half of all traffic coming from automated bots.

Distil Networks has made a business out of studying these bots and helping webmasters filter out the ones they don’t want on their sites.

This gives Distil a great deal of information about what is going on with bad bots and how the landscape is changing over time. Once a year, Distil compiles that information into a “Bad Bot Landscape Report”. The 2015 edition, which looks back at 2014, was released earlier this month.

The report highlights not only the shifts in the “bad bot” landscape, but also the hazards of bad bots and the challenges webmasters face in fighting them.

Defining a Bad Bot

Defining a bad bot is difficult because what some webmasters consider a bad bot, others might consider a good one.

In many ways, it’s easier to define a good bot, which is primarily any bot that the webmaster wants to allow on their site (even if they don’t actively realize it). These include search engines, which spider content on the Web regularly, and other bots that benefit webmasters.

Where good bots (usually) help a website, bad bots are out to hurt it. Exactly how depends on the bot. Bad bots include content scrapers, which grab content from sites, often for republication elsewhere; comment and post spammers; and bots engaging in click fraud. To make matters worse, bad bots are also responsible for a large share of site hacks, including both vulnerability scans and attempts to brute-force passwords.

Even if bots don’t directly harm your site, they can knock it offline by sending it more traffic than it can handle. This happened to Plagiarism Today in 2013, when a botnet attacking WordPress sites tried unsuccessfully to hack into the site but brought it down by overloading its server.

In short, the issue of bad bots affects every webmaster and, to that end, the Distil report comes with a dose of good news and a helping of very bad news.

The Good News

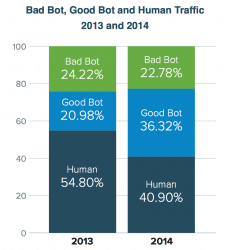

Right out of the gate, the report offers a reason to be optimistic: across the sample of Distil’s customers, bad bots made up a smaller percentage of traffic than they did a year earlier, dropping from 24.22% to 22.78%.

However, that drop isn’t necessarily a sign of fewer bad bots. Rather, the difference can be connected to a sharp increase in traffic from good bots, from 20.98% to 36.32%, which the report largely attributed to aggressive crawling by Bingbot (Bing’s search engine crawler) and other “upstart” search engines.

As such, while the percentage of traffic for bad bots went down, so did the percentage of traffic from human visitors, dropping from 54.80% to 40.90%.

In short, it’s likely that the raw amount of bad bot activity stayed largely the same or grew slightly, but that growth was drastically outpaced by the rapid crawling of search engine spiders.
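The effect described above, where a category’s share of traffic falls even though its raw volume does not, is easy to see with made-up numbers. The sketch below uses hypothetical request counts (not Distil’s data) in which bad-bot and human traffic stay flat while good-bot crawling triples:

```python
def traffic_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each traffic source's percentage of total requests."""
    total = sum(counts.values())
    return {source: 100 * n / total for source, n in counts.items()}


# Hypothetical request counts for illustration only; not taken from the report.
year_one = {"bad_bots": 2_000, "good_bots": 2_000, "humans": 6_000}
# Same bad-bot and human volume; only good-bot crawling grows.
year_two = {"bad_bots": 2_000, "good_bots": 6_000, "humans": 6_000}

for label, counts in (("year one", year_one), ("year two", year_two)):
    shares = traffic_shares(counts)
    print(label, {source: round(pct, 2) for source, pct in shares.items()})
```

In this toy example the bad-bot share falls from 20% to about 14.29% and the human share from 60% to about 42.86%, even though neither group sent a single fewer request.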

When looking at individual companies, there were also some encouraging signs of progress, as several providers made strides in reducing bot activity on their networks. Verizon, for example, was responsible for 10.99% of all bad bot traffic in 2013 but only 2.83% in 2014. Bad bots’ share of Verizon’s own total traffic dropped from 68.24% to 36.75%, still high but a nearly 50% reduction.
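The “nearly 50%” figure is a relative reduction, which is different from the raw drop in percentage points. A quick check using the Verizon numbers quoted above (the helper name `relative_reduction` is just for illustration):

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage decrease from `before` to `after`, relative to `before`."""
    return 100 * (before - after) / before


# Bad bots as a share of Verizon's own traffic, 2013 -> 2014.
own_traffic = relative_reduction(68.24, 36.75)
# Verizon's share of all bad-bot traffic, 2013 -> 2014.
share_of_all = relative_reduction(10.99, 2.83)

print(f"{68.24 - 36.75:.2f} percentage points, {own_traffic:.1f}% relative")
print(f"share of all bad-bot traffic fell {share_of_all:.1f}% relative")
```

That works out to a drop of 31.49 percentage points, or about 46.1% in relative terms, while Verizon’s share of all bad-bot traffic fell by about 74.2%.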

Likewise, Level 3, which had 76.97% of its outbound traffic come from bad bots, didn’t even make the list of top originators, and Las Vegas NV Datacenter, which had 96.67% of its outbound traffic come from bad bots, also fell off the list.

However, it wasn’t all good news. Amazon, which has two entries on the list, made only small gains against bad bots in terms of outbound traffic and actually grew its share of overall bad bot traffic.

Other companies, including OVH SAS and CIK, made their first appearance on the list.

Still, the numbers show that, with effort, real results can be achieved against the problem. But the report also shows that those results are going to be more and more difficult to achieve moving forward.

More Diverse and More Advanced

Taken as a whole, the Distil report shows that bad bots are growing more diverse and more complicated.

When it comes to diversity, bad bots are becoming an increasingly global problem. Although the U.S. still hosts over half of all bad bots, likely because of the prevalence of inexpensive hosting there, more and more countries are becoming battlefields.

In 2013, just 8 countries had more than a 1% share of all bad bot activity. In 2014, that number grew to 14, with increased activity in countries like France, Italy, India and Israel.

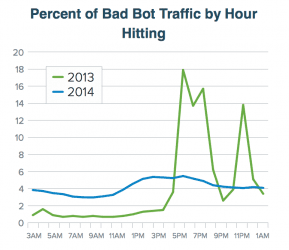

Bad bots are also becoming less predictable in their timing. In 2013, there were definite “peak” times for bad bot activity, with as much as 18% of all activity taking place during certain times of the day. In 2014, however, the curve smoothed out, with no obvious peaks and a steady 3% to 6% of all bad bot traffic taking place in each hour.
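One way to look for the kind of “peaks” the report describes in your own logs is to bucket flagged bot requests by hour of day and look at each hour’s share. The sketch below assumes you already have timestamps for requests you consider bad-bot traffic (how you flag them is out of scope here); the 10% peak threshold and the sample log are hypothetical, not values from the report.

```python
from collections import Counter
from datetime import datetime


def hourly_shares(timestamps: list[datetime]) -> dict[int, float]:
    """Percentage of requests falling in each hour of the day (0-23)."""
    if not timestamps:
        return {hour: 0.0 for hour in range(24)}
    counts = Counter(ts.hour for ts in timestamps)
    total = len(timestamps)
    return {hour: 100 * counts[hour] / total for hour in range(24)}


def peak_hours(shares: dict[int, float], threshold: float = 10.0) -> list[int]:
    """Hours whose share exceeds `threshold` percent (an arbitrary cutoff)."""
    return [hour for hour, pct in sorted(shares.items()) if pct > threshold]


# Hypothetical log: 20 flagged requests at 03:xx and one in every other hour.
log = [datetime(2014, 6, 1, 3, minute) for minute in range(20)]
log += [datetime(2014, 6, 1, hour) for hour in range(24) if hour != 3]

shares = hourly_shares(log)
print(peak_hours(shares))  # [3]
```

A flat distribution like the one the report describes for 2014, with every hour carrying a few percent, would return an empty list here.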

In short, the battle against bad bots is now a 24/7 global war.

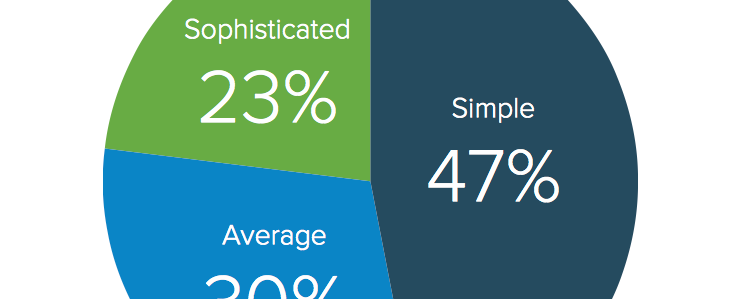

And the foes in that war are getting more sophisticated. Though Distil identifies 47% of all bad bots as being “simple”, meaning they are easily detected, 23% are considered “sophisticated” and 41% of all bots mimic human behavior to some degree.
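For a sense of why “simple” bots are easy to catch, here is a naive User-Agent check of the sort basic filters often start with. It is a hypothetical heuristic, not Distil’s detection method: it flags requests with a missing or blank User-Agent, or one naming a common HTTP library or command-line tool. The token list is an assumption and far from complete.

```python
from typing import Optional

# Substrings found in the default User-Agent strings of some common HTTP
# libraries and command-line tools. Illustrative and far from complete.
AUTOMATION_TOKENS = ("python-requests", "curl/", "wget/", "libwww-perl", "go-http-client")


def looks_like_simple_bot(user_agent: Optional[str]) -> bool:
    """Naive check: flag a missing/blank User-Agent or one naming an automation tool."""
    if user_agent is None or not user_agent.strip():
        # Mainstream browsers send a User-Agent header, so a blank one is suspicious.
        return True
    ua = user_agent.lower()
    return any(token in ua for token in AUTOMATION_TOKENS)


print(looks_like_simple_bot("curl/7.38.0"))  # True
print(looks_like_simple_bot(
    "Mozilla/5.0 (Windows NT 6.1; rv:35.0) Gecko/20100101 Firefox/35.0"))  # False
```

Because a client can send any header it likes, a check like this only catches bots that announce themselves. Anything that mimics a real browser passes straight through it.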

Finally, bots are no longer limiting themselves to the desktop web. Nearly 5% of all bad bots identified themselves as “Android Webkit Browser” in an attempt to access the mobile versions of websites, which are easier to scrape and likely easier to attack. But where the U.S. was the leader in overall bad bot traffic, China and Chinese mobile services were the runaway leaders in mobile bad bot activity.

All in all, bad bots are getting more sophisticated, more diverse geographically and are increasing their focus on the mobile web.

What Does This Mean?

The report does provide some clues about which types of sites see the most bad bot activity: small sites and digital publishing sites have the highest bad bot traffic as a percentage of their total traffic. The truth, however, is that every website has legitimate concerns about bad bots, and no two sites are alike.

A blog, for example, is primarily concerned with content scraping and comment spam. An ecommerce site also worries about content scraping, in particular price scraping, which can be used to aid competitors.

Any site that has user accounts will likely be concerned about brute-force bots, and all sites have an interest in bots that scan for vulnerabilities.

In short, while your site’s particular issue with bad bots is probably unique to it, there almost certainly is at least some reason to be worried.

So what can be done about it? Obviously Distil is promoting its own service, which in my experience is excellent, but it’s also not practical for many webmasters for various reasons.

For those, I offer the following suggestions:

- Keep on Top of Security: If you host your own site, stay on top of security and keep your software/plugins/themes up to date. Bad bots often try to exploit known security holes in these applications.

- Robust Passwords: Make sure your passwords are strong and, where possible, change your username to something that can’t be guessed. In WordPress, for example, that means moving away from the default “admin” username.

- Consider Anti-Bot Plugins: Various plugins and extensions work to detect bad bots or at least block their worst actions, such as posting spam or scraping content. Find tools that work to block the bots you’re most worried about.
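For site owners who write their own login handling, the idea behind many anti-brute-force tools can be sketched as a sliding-window limit on failed logins per IP address. This is a minimal in-memory sketch under stated assumptions (a single server process, a hypothetical default of 5 failures per 15 minutes). It is not the code of any particular plugin, and production setups usually keep this state in a shared store with expiry.

```python
import time
from collections import deque
from typing import Optional


class LoginThrottle:
    """Block an IP after too many failed logins inside a sliding time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 900.0):
        self.max_failures = max_failures  # hypothetical default: 5 failures
        self.window = window_seconds      # hypothetical default: 15 minutes
        self._failures: dict[str, deque] = {}

    def _prune(self, attempts: deque, now: float) -> None:
        # Discard failures that have aged out of the window.
        while attempts and now - attempts[0] >= self.window:
            attempts.popleft()

    def is_blocked(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        attempts = self._failures.get(ip)
        if attempts is None:
            return False
        self._prune(attempts, now)
        return len(attempts) >= self.max_failures

    def record_failure(self, ip: str, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        attempts = self._failures.setdefault(ip, deque())
        self._prune(attempts, now)
        attempts.append(now)


throttle = LoginThrottle()
for second in range(5):
    throttle.record_failure("203.0.113.7", now=float(second))
print(throttle.is_blocked("203.0.113.7", now=5.0))     # True
print(throttle.is_blocked("203.0.113.7", now=1000.0))  # False: window has passed
```

A login handler would call `is_blocked` before checking the password and `record_failure` after a failed attempt. Keying on IP alone is a simplification: botnets spread attempts across many addresses, which is one reason strong passwords and non-default usernames still matter.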

The truth is that no tool or approach is going to be able to block all bad bots. There will always be some that are impossible to detect, much like email spammers. However, with proper precautions, much of the problem can be blunted.

Bottom Line

When looking through this report, what I see is that the bot war is just beginning. While there has always been a game of cat and mouse between webmaster and bot developer, the rise in sophistication and diversification over the past year makes it clear that the war is starting to escalate.

Over the next year or two, we’re going to see bots get far more complex, far more diverse and far more effective. Part of this is because of the increased effort to battle bad bots, forcing bot developers to engineer better applications to stay alive. Much of it is also competition among the bots themselves, as each strives to outpace its rivals and reach its goals faster and more completely.

As webmasters, you’re not just caught in the crossfire, you’re the targets and the prize. Whether it’s comment spam, content scraping or site hacking, your site is the goal of these bad bots.

Now is the time to understand the problem and brace for it. These challenges are not going to get any easier, and even if the amount of bad bot traffic slows, it’s going to become more potent and more intelligent as it does so.

This is a war that can be won, but it’s going to take vigilant webmasters and service providers cooperating to shut bad bots down. Both sides have made some progress so far, but neither has shown anything near the commitment needed to win this battle.

(Disclosure: I use Distil on this site and provide testing and feedback in exchange for a free account.)