Comparing Citations to Detect Plagiarism

Two years ago (almost to the day) I covered the work of three researchers who were working on finding ways to detect plagiarism in research by looking at and comparing citations.

Two years ago (almost to the day) I covered the work of three researchers who were working on finding ways to detect plagiarism in research by looking at and comparing citations.

The principle was fairly straightforward, even plagiarized research papers need to have citations and those citations often closely mirror the ones found in the source work they copied from.

According to the research published two years ago (PDF), the technique had separate strengths and weaknesses from text-based plagiarism detection. For example, where traditional text-based detection could not detect translated, heavily paraphrased or plagiarism of ideas, citation-based detection couldn’t detect mere copying, inappropriate paraphrasing or short passages of plagiarism.

However, since all of these issues are relevant to research ethics, the researchers presented the proposed citation-based plagiarism detection as a way to supplement rather than replace traditional text-based matching in research papers.

Two years ago, the research was, more or less, simply experimental and theoretical. Though they had tested the technique on a series of papers, there was no practical demonstration of it.

That is, until now.

Researchers Bela Gipp, Normal Meuschke, Corinna Breitinger, Mario Lipinski and Andreas Numberger (All of which are either from the Department of Statistics at University of California, Berkeley or the Department of Computer Science at Otto-von-Guericke-University Magdeburg) recently unveiled a new prototype plagiarism detection system, one that uses a hybrid of text matching and citation-based plagiarism detection to try and spot unethical research.

The results are definitely interesting.

How it Works

For the user, the new prototype service initially works very similarly to any other plagiarism detection system. The user uploads two files or selects two documents from the available databases.

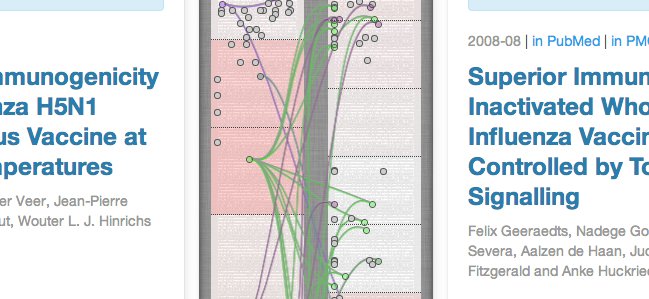

However, after you submit the works, you receive a very different interface. While it has a very familiary side-by-side comparison of the two documents, in the center there is a Web diagram that illustrates where the similar citations can be found.

In some cases involving very similar works, it can look like a tangled spiderweb.

In cases with very different works, however, it can look empty and vacant.

The prototype provides four different algorithms that can be used to compare the citations in the two papers.

- Bibliographic Coupling Strength (BC): The Bibliographic Coupling Strength score is an overall score indicating the number of citations in the two papers that are shared. Useful for an overall comparison of similarity, but not necessarily an indication of plagiarism.

- Citation Chunking (CC): Citation chunking looks at groups of citations that are similar, regardless of the order they are in and if there are non-matching citations in between. For example, if both papers cite the same six sources close together, this algorithm detects them regardless of order or extraneous citations.

- Greedy Citation Tiling (GCT): Greedy Citation Tiling looks at strings of citations that are presented in the same order. This is most useful at detecting paraphrased plagiarism where content was heavily rewritten but the citations remained in place.

- Longest Common Citation Sequence (LCCS): The Longest Common Citation Sequence algorithm looks at the longest number of citations that appear in both documents but in the same order, regardless of non-matching citations. The LCCS system identifies only one string (the longest) in each comparison.

While the BC tool is valuable for getting an overall feel of the similarity of the two papers, it’s the CC and GCT algorithms that really pull out and identify suspicious sections of the paper. The LCCS algorithm can identify the most worrisome aspect of the suspect paper, pointing to a string of citations that is both matching and in the same order throughout the paper.

But how well does the system work to detect plagiarism? Unfortunately, I wasn’t able to give it a full test due to both time and technical issues, but I do have some thoughts to share after spending a day with it.

A New But Potentially Effective Approach

I used the system for much of the day and tested out a combination of the sample papers they gave me and random papers in the databases they can search. The tool worked remarkably well and was able to reasonably quickly detect the similarities between papers and provide clues at how likely that the similarities were more than coincidence.

That being said, I was not able to test it with two papers of my own. I tried to use it to analyze two dissertations I had performed an analysis on previously and, after uploading the works, I received an error message. Whether it was a size issue or a problem with the PDFs, it didn’t seem that the checker could parse it.

My other concern was that, even though the tool was very well organized, there is still a steep learning curve. Even for someone such as myself who does plagiarism analyses regularly, it took a bit of time to get myself oriented and learn how to use the tool.

Also, unlike text matching, which pretty plainly lays out what is and is not copied, citation plagiarism detection requires a great deal more human effort. After all, there’s a much higher risk of false positives as papers that are different, but within the same niche, may have large amounts of overlapping sources simply by their nature.

Another limitation is that there is no way with this system to simply verify the originality of a submitted work. While there is a feature that finds similar papers when you load two documents from the databases, you can’t start with a questionable document and find likely matches. Also, that feature appears to hone more in the BC score than other traits, meaning it often times finds papers that are merely similar, not necessarily plagiarized.

Still, this prototype can be potentially very useful. Not only does it take a lot of the work out of doing a citation comparison, but it can help detect plagiarism that otherwise would go unnoticed.

This is a novel approach that can detect types of plagiarism that might otherwise fall through the cracks and it is a natural supplement to text-based detection.

(Note: Since I wasn’t able to load my two papers, I can’t say how effective the text matching is in the prototype.)

All in all, I’m very excited about the possibilities that this research brings forth and hopeful that the research is able to progress and the protype is able to be improved even further.

Bottom Line

In the end, this prototype shows a great deal of promise in terms of providing a new way to detect plagiarism (and other unethical behavior) in research.

While it is not a substitute for text-matching plagiarism detection, something the researchers openly acknowledge, it is potentially a powerful tool to use in conjunction with it.

It would be interesting to see what could happen if citation plagiarism were integrated with text-matching plagiarism. In addition to getting a report on the other works that have high textual similarity, one could also get a list of the works with high citation similarity as well.

When it’s all said and done, this tool has the potential to help detect and deter more types of plagiarism and unethical behavior and that, in the long run, will encourage researchers to create and publish better work.

That, in turn, can help science move forward and make the world just a little bit better for everyone.

Want to Reuse or Republish this Content?

If you want to feature this article in your site, classroom or elsewhere, just let us know! We usually grant permission within 24 hours.