PlagScan Review: Solid Plagiarism Detection

PlagScan is a plagiarism checker that certainly has its share of fans. In Dr. Weber-Wulff’s most recent round of plagiarism tests, PlagScan was listed as “partially useful”, the highest honor those tests awarded. Overall, PlagScan placed fourth.

That placed it well above better-known services, including Copyscape and Plagium, both of which are more widely used by webmasters who want to track their content.

Also, PlagScan has earned the trust of dozens of academic institutions and businesses, most of which are in the company’s native Germany.

However, Dr. Weber-Wulff’s tests were aimed at an education environment. The question remains: how well does it perform when it comes to protecting web-based content from infringement? I was recently approached by Markus Goldbach, PlagScan’s CEO, who asked me to do such a test.

So, I decided to put PlagScan through a brief test to find out how effective it was for this particular use case.

What Is PlagScan?

PlagScan originally saw life as SeeSources, a free plagiarism detection tool that focused on language patterns. However, to continue development, the people behind it took the product commercial and created PlagScan, which now operates as a professional and academic plagiarism detection service.

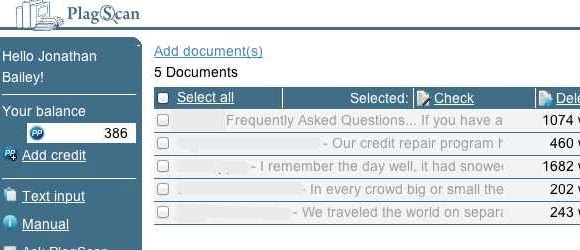

PlagScan is built on the Yahoo! BOSS API, the same API many similar services are built upon. You either upload a document or copy and paste the text you want to check. PlagScan then analyzes the text and emails you the results in PDF, plain text and DOCX formats. The results are also stored in your account.

The question is: how well does the system work? To find out, I decided to test five different documents in PlagScan (using the copy and paste method) and see what the results were.

The Test Results

To test PlagScan, I decided to pit it against two of the more common tools webmasters use to track text, Copyscape and Plagium. I had all three services analyze five separate and structurally different works, each with differing amounts of known plagiarism, to see how many results the services would uncover and how relevant those results would be.

Note: For all tests, I used the most advanced (“Pro”) features available, including a pro account in Copyscape and “Deep Scan” in Plagium. I did not count matches that PlagScan itself rated too low to be considered unoriginal. In each case, I used the text upload feature for consistency.

Test 1 – Poem (Medium Reuse)

For the first test, I ran an old poem of mine (243 words) that I knew still had a large number of copies on the Web, both legitimate and plagiarized.

- PlagScan: 20

- Copyscape: 10

- Plagium: 4

The results here are pretty striking. PlagScan flat out found more sources. That said, there was some duplication among its sources and, once you eliminate the confirmed dupes as well as a small number of false positives, there are still several pages that PlagScan found that Copyscape did not.

Still, Copyscape did find most, if not all, of the critical domains, but it was outmatched in this test.

Test 2 – Prose Piece (Low Reuse)

For the second test, I ran an old short essay of mine (202 words) that I knew had seen only limited reuse.

- PlagScan: 2

- Plagium: 1

- Copyscape: 0

In this test, PlagScan managed to detect both the original work and one plagiarism of it. Plagium found only the original, and Copyscape reported the work as clean.

In short, PlagScan found a plagiarist of this work that the other two did not.

Test 3 – Short Story Piece (Low Reuse)

For the third test, I used an old short story of mine (1,682 words) that, like the essay, saw only limited reuse.

- PlagScan: 18*

- Plagium: 1

- Copyscape: 0

Once again, Copyscape reported the work as clean and Plagium found only the original. This time, however, PlagScan found some 18 matches. Most of those matches were for very short sections of the work, and all of them except one, the original, were not actually copies.

This was a clear case of PlagScan returning a large number of false positives, though it did alert me to one or two pieces that might be of interest.

Test 4 – Marketing Copy (High Reuse)

For the fourth test, I decided to run a client’s marketing copy (460 words) that had seen moderate reuse and plagiarism over the past few months. The goal was to test how up to date the systems’ databases were.

- Copyscape: 39

- PlagScan: 23

- Plagium: 11

PlagScan lost this one, finding only 23 results to Copyscape’s 39. However, both services suffered from a false positive problem: toward the bottom of both result lists, several sites were listed even though they had only a small handful of words in common with the original.

Even so, Copyscape seemed to have slightly fewer false positives in this test, making it the clear winner.

Test 5 – Information Copy (High Reuse)

Finally, I tested one of the more commonly plagiarized pieces of text I work with: an informational piece (1,074 words) widely lifted by competitors.

- Copyscape: 39

- PlagScan: 25

- Plagium: 11

Once again, Copyscape found more matches, though this time both it and PlagScan were badly plagued by false positives and listed many results that weren’t actually matches. Still, Copyscape seemed to fare a little better on accuracy and, at the same time, turned up higher-quality results.

All in all, this one is another win for Copyscape.

Beyond the Numbers

Numerically, this is a very impressive showing for PlagScan as it either beat or held its own against much better-known competitors. However, this accuracy comes at a cost, both financially and time-wise.

First, PlagScan does cost a good deal more than Copyscape. Copyscape, for example, charges 5 cents a search (up to 2,000 words), meaning this test cost me a mere 25 cents. PlagScan, on the other hand, charges one credit per 100 words. At its cheapest, a credit costs about 1.1 cents. If you check more than 500 words in a search, Copyscape will always be cheaper (barring a different plan).

All in all, I spent 39 credits on this test, which at its cheapest would be just shy of 43 cents. Still not prohibitively expensive, but worth noting, especially for those with much larger projects.
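To make the pricing comparison concrete, here is a small Python sketch using only the prices quoted in this review: 5 cents per Copyscape search of up to 2,000 words, and one PlagScan credit per 100 words at about 1.1 cents per credit. It assumes PlagScan rounds a partial 100-word block up to a whole credit, which is consistent with the 39 credits the five test documents used. The constant and function names are hypothetical and not part of either service. Under these assumptions the crossover actually comes a bit earlier than 500 words: any text over 400 words needs five credits (about 5.5 cents), which is more than a single Copyscape search.

```python
import math

# Prices as quoted in this review; actual plans and prices may differ.
COPYSCAPE_CENTS_PER_SEARCH = 5.0   # one search covers up to 2,000 words
COPYSCAPE_MAX_WORDS = 2000
PLAGSCAN_WORDS_PER_CREDIT = 100
PLAGSCAN_CENTS_PER_CREDIT = 1.1    # cheapest credit price mentioned


def plagscan_credits(words: int) -> int:
    # Assumption: a partial 100-word block is rounded up to a whole credit.
    return math.ceil(words / PLAGSCAN_WORDS_PER_CREDIT)


def plagscan_cents(words: int) -> float:
    return plagscan_credits(words) * PLAGSCAN_CENTS_PER_CREDIT


def copyscape_cents(words: int) -> float:
    # Only the single-search case (up to 2,000 words) is modeled here.
    if words > COPYSCAPE_MAX_WORDS:
        raise ValueError("texts over 2,000 words are not modeled in this sketch")
    return COPYSCAPE_CENTS_PER_SEARCH


# Word counts of the five test documents used in this review.
test_docs = {
    "poem": 243,
    "essay": 202,
    "short story": 1682,
    "marketing copy": 460,
    "informational piece": 1074,
}

total_credits = sum(plagscan_credits(w) for w in test_docs.values())
plagscan_total = total_credits * PLAGSCAN_CENTS_PER_CREDIT
copyscape_total = sum(copyscape_cents(w) for w in test_docs.values())

# Smallest word count at which one Copyscape search is cheaper than PlagScan.
break_even = next(
    w for w in range(1, COPYSCAPE_MAX_WORDS + 1)
    if plagscan_cents(w) > copyscape_cents(w)
)

print(total_credits)             # 39
print(round(plagscan_total, 1))  # 42.9
print(copyscape_total)           # 25.0
print(break_even)                # 401
```

The sketch only models texts that fit in a single Copyscape search, since the review gives no pricing for longer ones.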

The bigger drawback to PlagScan is the system itself. First, results with PlagScan take far longer than with either Copyscape or Plagium. With every test, I would start PlagScan, run the same text through the other two services, and have both of their results back before PlagScan had finished. It was by no means torturously slow, taking no more than a couple of minutes on the longest test, but that time can add up if you’re testing a lot of work.

Finally, from a user interface standpoint, PlagScan is definitely more geared to checking the authenticity of an unknown work than to finding plagiarism of a known original online. The reports deal more with how much plagiarism was detected and lack features such as case management or case prioritization. There’s also no way to monitor for plagiarism automatically, as there is with Copyscape’s Copysentry and Plagium’s alert system.

In the end, though PlagScan definitely does a great job of finding matches, possibly a better job than Copyscape in many cases, using it will be unwieldy for most webmasters, bloggers, authors and other content creators.

That doesn’t mean it isn’t worthwhile (it definitely is), just that its use may be somewhat limited.

Bottom Line

In the end, PlagScan fared very well in these tests. It competed well with Copyscape, and both, numerically at least, trounced Plagium. That said, Plagium was nearly immune to the false positive issue that plagued both Copyscape and PlagScan, making it more accurate, though probably not the most complete.

Clearly, though, PlagScan did better with some kinds of works than others. This is typical of plagiarism checkers. I wouldn’t recommend you rely on it, or any other plagiarism checker, as your sole tool. Not only would you likely miss results, but its setup would make that unwieldy.

That being said, it’s definitely a new tool to put in the metaphorical toolbox, something to use to support your plagiarism detection efforts. It might not replace anything else, but using it certainly can help you see and catch more.

I will certainly be keeping my account active and using it, at least from time to time. As it adds tools and services aimed at protecting web content, I’ll begin to use it even more.

All in all, PlagScan held its own and, with a few tweaks, could become a front-runner in this field in very short order.

Note: I can provide copies of the test works to all parties if they are interested.
