Why Web Scraping/Spinning is Back

Spoiler: Google has a role...

February 23, 2011, was a banner day for plagiarism and copyright infringement of blog/news content. It was the day that Google launched a major Panda/Farmer update that sought to reduce the presence of “low quality” content in search results.

Though the change was aimed at so-called “content farms”, sites that would pay human authors small amounts to churn out countless articles of questionable quality, it ultimately hit a variety of other unwanted content types including article spinning, article marketing and web scraping.

Prior to this update, many spammers found a great deal of success by simply taking content they found on other sites and simply uploading it elsewhere. This was done with or without attribution, with or without modification and almost always without permission.

However, after the update, there was a scramble to get away from all forms of questionable content marketing. Other equally questionable tactics rose up from the ashes, but the plague of web scraping was seemingly done as a major concern for sites.

Unfortunately, nine years later (almost to the day), that is seemingly much less true. Now it’s easy to find scraped, plagiarized and otherwise copied articles in search results. To make matters worse, they often rank higher than the original.

So what happened? There doesn’t appear to be a clear answer. What is obvious is that Google (and other search engines) have a serious problem in front of them and the time to address it is now.

The Nature of the Problem

In August, Jesselyn Cook at HuffPost wrote an article about “Bizarre Ripoff ‘News’ Sites” that were ripping off her work. There she provided several examples of her articles appearing on spammy sites with strange alterations to the text.

The alterations often made no sense. For example, “Bill Nye the Science Guy Goes OFF About Climate Change” became “Invoice Nye the Science Man goes OFF About Local Weather Change.”

To those familiar with article spinning, this is a very familiar tale. These sites are clearly using an automated tool to replace words with synonyms. The goal is to create content that appears, to Google at least, to be unique. Whether it’s human-readable is none of the site’s concern as long as they get those Google clicks (and some ad revenue). It’s a tactic that’s been around since at least 2004 and had a heyday during the late 2000s.

However, it isn’t JUST an issue of article spinners doing a good job of fooling the search engines. Much of the actual plagiarism and scraping happens in plain sight.

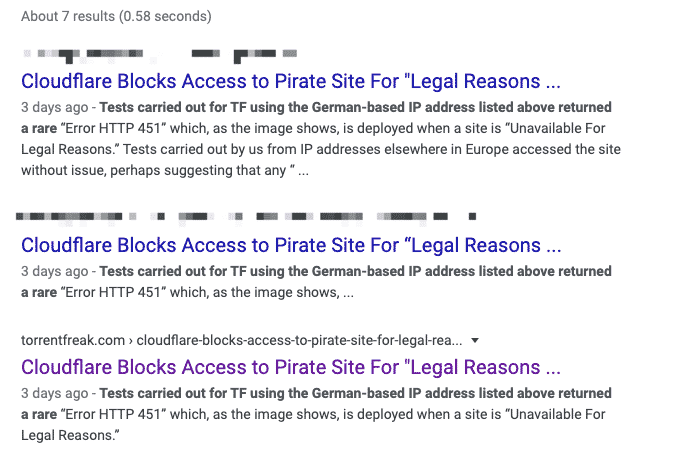

Take, for example, this article by TorrentFreak about Cloudflare’s blocking off a German pirate site. If you search for a passage from it you’ll find not only multiple copies but that several are actually ranking higher. (Note: This is without going into the supplemental results).

Though the sources above do attribute the authors, they do so with a small link in the footnote. Hardly adequate attribution for copying a full article (with images) and not compliant with Torrentfreak’s Creative Commons License.

While it’s unlikely that these sites are harming TorrentFreak in any significant way, it paints a bleak picture. It once again pays to be a web scraper, with or without article spinning or attribution. You may not get rich doing it, but you can certainly traffic to be found.

What Changed?

The big question is “What changed?” Why is it that, after nearly a decade, these antiquated approaches to web spamming are back?

The real answer is that web scraping never really went away. The nature of spamming is that, even after a technique is defeated, people will continue to try it. The reason is fairly simple: Spam is a numbers game and, if you stop a technique 99.9% of the time, a spammer just has to try 1,000 times to have one success (on average).

But that doesn’t explain why many people are noticing more of these sites in their search results, especially when looking for certain kinds of news.

Part of the answer may come from a September announcement by Richard Gingras, Google’s VP for News. There, he talked about efforts they were making to elevate “original reporting” in search results. According to the announcement, Google strongly favored the latest or most comprehensive reporting on a topic. They were going to try and change that algorithm to show more preference to original reporting, keeping those stories toward the top for longer.

Whether that change has materialized is up for debate. I, personally, regularly see duplicative articles rank well both in Google and Google News even today. That said, some of the sites I was monitoring last month when I started researching this topic have disappeared from Google News.

But, whether there’s been a significant change or not, it illustrates the problem. By increasingly favoring “new” content, Google opened a door for these spammers. After all, any scraped, copied or spun version of an original article will appear to be “new” when compared to the original.

Previously, being the first to be seen online with a piece of content was a safeguard against infringers. Google strongly preferred original sources and it showed. However, at least with some news topics, the script has been flipping and “New” gets the nod.

Google may be attempting to dial that back some now, but the damage may already be done for some creators.

That said, this is far from the only reason that this has become a revived issue for some. Google has also been focused on other areas of improving search results. Spammers have evolved and Google did so as well. This might simply be an area that’s gotten less focus in recent months and years.

Regardless, this has become a growing issue, especially for news sites who are not only the most common targets of such scraping but are the most vulnerable under the current algorithms.

Bottom Line

Right now, this is more of an issue to watch than an issue to be overly concerned with. We’re not yet in the heyday of web scraping that was the mid-to-late-2000s. Few sites appear to be severely impacted and most of those are in news categories where timeliness is more important.

However, even those sites probably aren’t feeling much of a pinch yet. This is more of a canary in a coal mine moment that a true alarm.

That said, those that are producing quality work need all of the help they can get and Google letting copycats undermine them just makes things that much more difficult.

However, it goes to show that, while scraping and spinning may seem like they died back in 2011, they are far from in the grave. Those techniques are alive and well, just waiting for Google (or any other search engine) to give it the opportunity it needs to burst through to the forefront.

Want to Reuse or Republish this Content?

If you want to feature this article in your site, classroom or elsewhere, just let us know! We usually grant permission within 24 hours.