Emma: The Writing Identity and Authorship AI

Though plagiarism detection systems vary in their sophistication, most work on a similar principle: Matching strings of text to other strings of text.

The approach has proved fairly effective over the past 20 or so years and much of the technology, especially at the upper echelon of the field, is very impressive. It’s also been a springboard for major advancements such as translated plagiarism detection.

Still, at the end of the day, plagiarism detection tools are looking for text matches and, while that is great for spotting copy and paste plagiarism as well as a great deal of modified plagiarism, it doesn’t get to the core of authorship.
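To make the text-matching principle concrete, here is a minimal sketch of one common way to do it: comparing overlapping runs of words (often called shingles) between a suspect text and a source. This is an illustration only, not how any particular commercial detector works, and the function names and the three-word window are assumptions chosen for the example.

```python
import re

def shingles(text, n=3):
    """Return the set of n-word sequences (shingles) found in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap(suspect, source, n=3):
    """Fraction of the suspect's shingles that also appear in the source."""
    a, b = shingles(suspect, n), shingles(source, n)
    return len(a & b) / len(a) if a else 0.0

source = "People tend to write in largely the same way."
copied = "As we know, people tend to write in largely the same way."
original = "Every author develops habits that are hard to shake."

print(overlap(copied, source))    # 0.7 (7 of the 10 three-word runs match)
print(overlap(original, source))  # 0.0
```

A high score shows that the text matches something else. It says nothing about who actually wrote either piece, which is the gap described above.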

Even after performing a detailed plagiarism analysis, it can still be impossible to tell whether or not an author actually wrote the piece they claim as their own.

However, Emma Identity (Emma) is hoping to change that.

Emma is a different approach to plagiarism detection in that it doesn’t detect plagiarism at all. Instead, Emma is an AI that aims to detect authorship. It learns the patterns of an author and aims to guess if another piece is or is not written by the same person.

Curious about the idea, I decided to put Emma to the test and take part in its gamified beta.

What I learned is that, while the idea may be interesting, it’s a long way from definitively telling who truly authored a piece.

The Idea Behind Emma

The idea behind Emma is fairly straightforward: People tend to write in largely the same way.

This is something that human beings are often able to pick up on quickly. For example, if you read a piece by Herman Melville and a piece by Charles Dickens, you would have no trouble distinguishing between the two authors if you were familiar with their writing.

Emma is an attempt to reproduce that intuition through AI. It works by analyzing an author’s writing against some 50 different parameters to determine their writing style, then comparing that style against unknown works and providing a thumbs up or thumbs down.

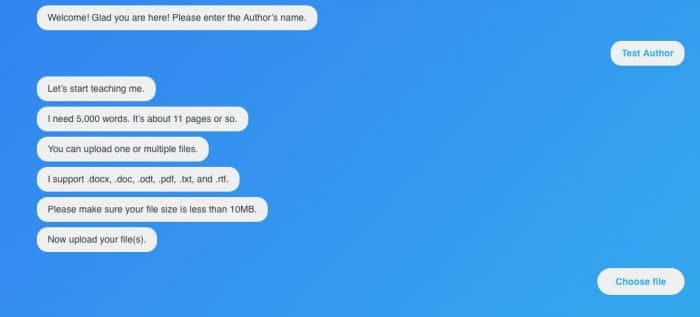

Currently, this is achieved by feeding Emma a large amount of content from the author, about 5,000 words, and then submitting a string of unknown documents to have Emma guess the authorship. The idea is that once you feed the AI enough information to learn the author’s style, it can then guess whether a new work was created by them.

Ideally, the creators of Emma would like this to be useful for a variety of purposes, including letting educators spot plagiarism, allowing lawyers to prove authorship and even enabling law enforcement to identify the authors of blackmail notes.
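The learn-then-compare workflow described above can be sketched in a few lines. To be clear, this is not Emma's method: its roughly 50 parameters are not public, so the feature set here (ten common function words plus average word and sentence length), the cosine comparison and the 0.9 threshold are all assumptions made purely for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical feature set; Emma's real parameters are not public.
FUNCTION_WORDS = ["the", "and", "of", "to", "a", "in", "that", "it", "is", "i"]

def profile(text):
    """Turn a text into a small vector of style features."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        raise ValueError("text must contain at least one word")
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    counts = Counter(words)
    # Relative frequency of each function word.
    features = [counts[w] / len(words) for w in FUNCTION_WORDS]
    # Average word length and average sentence length, roughly scaled
    # so they do not swamp the frequency features.
    features.append(sum(len(w) for w in words) / len(words) / 10)
    features.append(len(words) / len(sentences) / 100)
    return features

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def same_author(known_text, unknown_text, threshold=0.9):
    """Return (verdict, score); the threshold is an arbitrary assumption."""
    score = cosine(profile(known_text), profile(unknown_text))
    return score >= threshold, score

known = "I went to the store. It was closed, and that is the end of it."
unknown = "The committee hereby resolves to adjourn until further notice."
verdict, score = same_author(known, unknown)
print(verdict, round(score, 3))
```

A real system would need far more text and far richer features than this, which is presumably why Emma asks for about 5,000 words before it will guess.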

However, my brief experiment found that, while impressive, the technology was far from definitive.

My Test

To test Emma, I decided to use myself as the subject. Since I have many thousands of words of my own writing in my Google Docs, it was an easy source for me to pull from.

When I first signed in to Emma, it asked me to feed it 5,000 words of my writing. I did this by providing it with a lengthy diary entry that’s still in progress, followed by a series of shorter articles I’ve written on plagiarism and copyright issues.

After I reached the requisite 5,000 words, Emma said it was ready to start analyzing unknown works.

At first I fed it two other articles that I had written, and in both cases it came back saying that they were mine. Emma was startlingly certain of my authorship, reporting 97 and 100 percent certainty respectively.

It was then I decided to throw Emma some curveballs. I first fed it a non-fiction story where I recalled something from my distant past (though it was written recently). In that case, Emma reported it was not likely mine. I then did the same with a recent case study I had written for a client and, once again, Emma did not recognize it as my own.

After analyzing 5,000 words of my work and four additional documents, Emma was 2-2.

I then fed Emma two more documents, neither written by me. The first was a blog post from my partner and the second was a blog post from another copyright blogger. In both cases Emma correctly recognized that they were not from me, bringing the final score to 4-2.

Though this is an admittedly limited sample size, it does point to some pretty significant limitations of Emma.

My Impressions of Emma

To be clear, Emma’s technology is very impressive. Authorship is one of the toughest things for an AI to crack and to have any success here is worth applauding.

According to Emma’s creators, the AI has approximately an 85% success rate in spotting authorship, and that largely falls in line with my experience. My own rate was lower, at four correct out of six, but with a larger sample size I have no doubt it would have ended up in that range.

Still, that doesn’t mean that Emma is ready to start assisting the FBI. This is technology that is very much in beta (which is what this test is considered) and it clearly has a long way to go.

The biggest problem Emma had, in my limited testing, wasn’t false positives but false negatives. Emma never attributed another person’s writing to mine, but twice told me I hadn’t written something I had.
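For anyone who wants to see the tally, the six documents from my test can be scored in a few lines. The data comes straight from the results described in this article; the variable names are just for illustration.

```python
# (written_by_me, emma_said_mine) for the six documents in this test
results = [
    (True, True),    # article 1
    (True, True),    # article 2
    (True, False),   # non-fiction story
    (True, False),   # client case study
    (False, False),  # my partner's blog post
    (False, False),  # another copyright blogger's post
]

correct = sum(actual == guess for actual, guess in results)
false_negatives = sum(actual and not guess for actual, guess in results)
false_positives = sum(guess and not actual for actual, guess in results)
accuracy = correct / len(results)

print(f"Score: {correct}-{len(results) - correct}")  # Score: 4-2
print(f"Accuracy: {accuracy:.0%}")                   # Accuracy: 67%
print(f"False negatives: {false_negatives}")         # False negatives: 2
print(f"False positives: {false_positives}")         # False positives: 0
```

With only six documents, none of these figures carries much statistical weight, but the split shows where every one of the misses fell.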

That seems to stem from Emma not being very good at identifying when authors change voice. The writing I do in my personal journal is different from what I do in articles, which is different from case studies. Though they are all still very much me, they’re different styles of writing with different purposes.

It’s a bit like fooling facial recognition software with a pair of glasses or by growing a beard. It’s understandable why it might struggle, but the person in the image is still me and the AI is still wrong.

So while the issues are completely understandable, these are still obstacles that it has to overcome if Emma hopes to achieve its goal.

Bottom Line

Right now, Emma is a long way off from its goal. I can’t imagine using Emma in a plagiarism investigation, much less a law enforcement one, at this time. The accuracy just isn’t there.

However, that’s not to say that it can’t or won’t be there in the future. Emma is in beta and there is still work going on behind the scenes.

Still, honing it to the point where it is truly useful is going to be a mammoth task. Authorship is a very complex issue and, while it is true that people do tend to write in a similar fashion, they can also write very differently day to day.

While Emma seems to have a reasonable handle on how different people write, it doesn’t quite grasp how a single author can write differently in various situations. Detecting when an author changes style or intention is going to be a very difficult task for Emma to crack.

Still, the technology is impressive and fun. Furthermore, the makers of Emma were right to gamify the beta as that is what it’s best suited for right now: An entertaining game.

Though it may become something usable for more serious purposes later, it’s not there today nor are the creators claiming it is.

But that’s not to say we won’t be having a very different conversation in a few years…
