Understanding the Turnitin/Wikipedia Collaboration

In late October, Turnitin announced that they had partnered with Wikipedia to provide plagiarism and copyright infringement-detection services for all of the articles in the English Wikipedia.

In late October, Turnitin announced that they had partnered with Wikipedia to provide plagiarism and copyright infringement-detection services for all of the articles in the English Wikipedia.

However, the details of how the arrangement works and why it’s important for Wikipedia takes a bit of explaining. The reason is because the arrangement has literally been years in the making and fits into a complex process at Wikipedia that involves both human editors and Wikipedia’s own bots.

But through the layers comes a process that, hopefully, will mean that Wikipedia has less copyright infringing and plagiarized content on its site as instances of copied text are detected as they happen, making editors aware of potential problems immediately and enabling them to take action swiftly.

This should mean fewer copyright issues for the site, both in terms of copyright notices Wikipedia receives, but also help with licensing the encyclopedia itself. After all, licensing an encyclopedia can be tricky unless you’re sure everything within it is original

But beyond the copyright issues, this move should also lead better quality writing and research on Wikipedia due to improved citation and originality standards.

How the System Works

Cooperation between Turnitin and Wikipedia had been under consideration for years. In 2012, Wikipedia posted a request for comment (RfC) outlining a possible collaboration between the two.

Cooperation between Turnitin and Wikipedia had been under consideration for years. In 2012, Wikipedia posted a request for comment (RfC) outlining a possible collaboration between the two.

However, that collaboration began in ernest in 2014 with the launch of Eranbot.

Eranbot was conceived by James Hellman, who goes by the username Doc James on Wikipedia, and then developed by Eran Rosenthal, from whom the bot got its name. The idea was to create a bot that could automate much of the copied text detection, with an aim to reduce both copyright infringement and plagiarism.

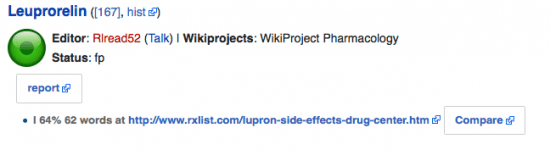

Eranbot looks for new edits to Wikipedia and, for edits that are above a certain length, submits the edits to iThenticate, a plagiarism detection tool created by Turnitin.

iThenticate then checks the text for duplication elsewhere on the web. If it’s found on a Wikipedia mirror site or the link is broken, iThenticate removes the link. If other matching text remains and is above a certain quantity, the amount of which has not been made public, it will then be put on a copyright report page for analysis by a Wikipedia editor.

The system was actually first tested in August 2014, when Wikipedia used it on all of the medical articles in its database. After a successful run, in April of 2015 it was expanded to the whole of the English Wikipedia.

You can view the results form Eranbot on the Copyright page, which seems to be generating dozens of results each day for investigation by an editor.

Those editors can then either mark the passage as being a true positive (TP) or a false positive (FP). In cases of true positives, the editor is tasked with making the needed changes to the work to ensure copyright and ethical compliance. Whether that means adding attribution, removing the text or rewriting it. For false positives, no action is needed.

The editor also has the ability to indicate whether citation is present or needed, whether the site involved is a Wikipedia mirror (and thus needs to be added to the list) or if it’s from an open-licensed source that permits the use of the text.

However, it’s important to note that Eranbot nor iThenticate can or will edit an article directly. All they can do is spot suspicious text and flag it for an editor to review. The only changes to the articles are by the humans.

In short, Eranbot is a tool for editors, not a replacement for them.

An Interesting Workflow

I’ve worked with companies that have either implemented or are implementing plagiarism detection strategies for content they create. While every organization has a different strategy, they all have the same few steps:

- Content is Automatically Checked: Using a chosen criteria, content submitted is automatically checked for plagiarism.

- Suspicious Content is Flagged: Suspicious content is then flagged. Once again, the criteria is different in each case, but there is almost always an automated test for what warranted further attention and what is ignored.

- Suspicious Content is Evaluated: Once the suspicious content is flagged, it’s then passed on to humans who evaluate it, determine if it’s a false positive and what needs to be done with the content if it isn’t.

In that regard, Wikipedia has pieced together a very similar workflow. One similar to what I would expect to see at a news site or a publisher. However, what’s different is that, like everything else on Wikipedia, it’s done through volunteers. Everyone, including the developers of Eranbot, are volunteering for this.

To me, the most interesting thing about Wikipedia has always been its ability to bring some semblance of order out of what seems like it should be chaos, something it does well here too.

Bottom Line

Whether the Wikipedia/Turnitin approach should be a model for other companies depends on the needs of those companies. However, for the massive amount of content that’s put on Wikipedia every day, the site has found a decent way to balance preventing copyright infringements from appearing on the site and not inundating editors with suspicious passages.

However, if the system does wind up struggling, it will likely be the human element that falls apart. If the human editors can’t keep up with the incoming matches, then either Wikipedia will have to adjust the sensitivity of the system or risk a backlog that overtaxes editors and still keeps infringing text on the site.

In the end, if the human and technology elements can work together, this could do a great deal to help keep infringing and plagiarized text off of Wikipedia. While it won’t address any infringements from the past, it should at least reduce the ones in the future.

Disclosure: I am a paid consultant and blogger for iThenticate and Turnitin.

Want to Reuse or Republish this Content?

If you want to feature this article in your site, classroom or elsewhere, just let us know! We usually grant permission within 24 hours.