New Turnitin Study on the Impact of Plagiarism Detection in Higher Education

Back in September, Turnitin, the company behind the widely used plagiarism detection tool of the same name, released a study that looked at the impact plagiarism detection tools had on high schools.

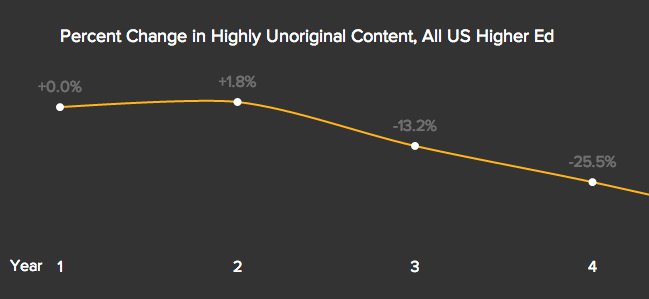

The results were pretty striking. Over a period of five years, schools saw an average decrease of 33.4% in incidents of likely plagiarism (papers with more than 50% unoriginal content). All told, 43 of the 50 states saw a reduction in plagiarism and, after a small increase in the second year (the first after the baseline year), schools saw, on average, a year-over-year decrease in likely plagiarism with every further year of use.

Now Turnitin is doing the same thing for higher education, looking at some 1003 colleges that have been with the service since before January 1, 2011. Once again, they looked at the number of papers that had come back as 50-100 percent matched content, meaning that over half of the paper was unoriginal (though not necessarily plagiarized).

The results in higher education were remarkably consistent with the results of the high school study, showing an overall decrease in the amount of suspect work detected that averages out to 39.1% over the course of five years. However, the new study also contains some new information that is interesting and may shed some light on how to better use plagiarism detection tools in the classroom.

Note: Since this study is so similar to the first one, I’m going to follow the same pattern in breaking it down.

The Basics of The New Study

The new study, for the most part, followed the pattern of the high school one. Turnitin looked at schools that had been with them for at least three years and used each school’s first full year of usage as a baseline. A full year of usage was one that met both of the following conditions:

- The institution used Turnitin for the full calendar year.

- The year accounts for at least 10% of the institution’s total lifetime submissions.

Next, Turnitin counted the works with more than 50% unoriginal content and divided that by the total number of works submitted to obtain a percentage of highly unoriginal works. It then tracked how that percentage changed from year to year.
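The method described above can be sketched in a few lines of Python. This is only an illustration: the `YearStats` record, the function names and the sample numbers are all hypothetical, and it assumes the reported reductions are relative changes in the flagged percentage rather than percentage-point differences, which the study summary does not spell out.

```python
from dataclasses import dataclass


@dataclass
class YearStats:
    """One institution's submissions for one calendar year (hypothetical record)."""
    year: int
    total_papers: int          # all papers submitted that year
    flagged_papers: int        # papers with more than 50% matched content
    full_calendar_year: bool   # service was in use for the whole year


def baseline_index(years: list[YearStats], min_share: float = 0.10) -> int:
    """Index of the first year that was a full calendar year of use and holds
    at least min_share of the institution's lifetime submissions."""
    lifetime = sum(y.total_papers for y in years)
    for i, y in enumerate(years):
        if y.full_calendar_year and y.total_papers >= min_share * lifetime:
            return i
    raise ValueError("no qualifying baseline year")


def unoriginal_rate(y: YearStats) -> float:
    """Share of that year's papers that were flagged, as a percentage."""
    return 100.0 * y.flagged_papers / y.total_papers


def change_from_baseline(years: list[YearStats]) -> dict[int, float]:
    """Relative change in the flagged rate versus the baseline year, in percent.
    ASSUMPTION: relative change. Negative values mean fewer flagged papers."""
    start = baseline_index(years)
    base = unoriginal_rate(years[start])
    return {
        y.year: 100.0 * (unoriginal_rate(y) - base) / base
        for y in years[start + 1:]
    }


# Made-up numbers for a single hypothetical school, not data from the study.
school = [
    YearStats(2008, 200, 30, False),   # partial year, skipped
    YearStats(2009, 1000, 100, True),  # baseline year: 10.0% flagged
    YearStats(2010, 1000, 110, True),  # 11.0% flagged
    YearStats(2011, 1000, 80, True),   # 8.0% flagged
    YearStats(2012, 1000, 60, True),   # 6.0% flagged
]
for year, change in change_from_baseline(school).items():
    print(f"{year}: {change:+.1f}%")
```

With these invented figures, the flagged rate rises 10% in the year after the baseline and ends 40% below it, the same up-then-down shape the studies describe.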

However, where the high school study broke apart its findings by state, Turnitin broke apart the higher education one by institution type, separating out 2-year and 4-year schools and then further breaking them down by size, ranging from schools with fewer than 1,000 students to those with more than 10,000.

This resulted in 12 different categories of schools, ranging in size from 37 schools to 122.

However, regardless of size or type, all categories saw a drop in the amount of unoriginal content detected over the course of five years. Though that drop varied based on school size, ranging from 19.1% for small four-year schools to 77.9% for mid-sized two-year colleges, no category saw an overall increase in either the fourth or fifth year.

Combined, the schools saw an average reduction in unoriginal work of 39.1% over the course of the five years.

Concerns and Limitations

The concerns and limitations of this study are similar to those of the first one, so feel free to revisit the previous article for more detail.

On that note, here are the key concerns and limitations with the study:

- Potential Bias: The study is promotional in nature and the data comes from the company selling the product. While it’s hard to imagine the data coming from anywhere else, the questions are inevitable.

- Incomplete Data: All the study presents are the percentages and not the raw numbers. While that may be good for client privacy, it hinders some of the analysis. Likewise, there’s no control group to compare against for schools that don’t use detection tools.

- Only Counts What Turnitin Sees: The reduction only shows that Turnitin is detecting less unoriginal content, not that plagiarism or cheating has gone away.

Still, as I said in the earlier article, the data is interesting and useful despite its limitations.

Since tricks to beat Turnitin are rare and squashed quickly, it’s unlikely that the drop in percentage represents students becoming smarter plagiarists, at least not of the copy and paste variety. Students may still be cheating and taking other shortcuts, but turning in unoriginal work verbatim is definitely happening less often.

All of that said, it’s important to keep those limitations in mind as we look deeper into the data and try to draw some conclusions.

Lessons and Takeaways

For schools considering plagiarism detection tools, or simply those interested in how such software is applied, there are a few key takeaways in the data:

- Remarkable Similarity to High School Data: The results of the higher education study mirror the high school one almost perfectly. Not only were the total reductions similar, but the pattern was the same, including a slight increase in the second year of use. This indicates that the results are not an anomaly specific to one study or the other.

- 2-Year Schools Saw Greater Reductions: Though all schools saw a reduction in detected unoriginal writing, 2-year schools, overall, did better than 4-year ones. In total, 2-year colleges saw an average reduction of 59.3% where 4-year schools saw a reduction of 31.8%. (Note: Four-year schools made up nearly 73% of all schools studied.)

- School Size Was Largely Irrelevant: While 2-year schools saw greater reductions than 4-year schools, the amount of reduction differed noticeably between size categories without following any consistent pattern.
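As a rough sanity check, the two group figures above can be combined to see whether they line up with the 39.1% overall reduction. The sketch below assumes the overall number is a per-school average, so each group is weighted by its share of schools. That weighting is my assumption, not something the study states, and the 73% share is itself only approximate.

```python
# Figures quoted in the article above.
TWO_YEAR_REDUCTION = 59.3    # percent, 2-year colleges
FOUR_YEAR_REDUCTION = 31.8   # percent, 4-year schools
FOUR_YEAR_SHARE = 0.73       # "nearly 73%" of schools, so only approximate
REPORTED_OVERALL = 39.1      # percent, all schools combined


def weighted_mean(values: list[float], weights: list[float]) -> float:
    """Average of values where each value counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# ASSUMPTION: the overall figure is a per-school average, so each group is
# weighted by its share of schools. The study may have weighted differently.
estimate = weighted_mean(
    [TWO_YEAR_REDUCTION, FOUR_YEAR_REDUCTION],
    [1 - FOUR_YEAR_SHARE, FOUR_YEAR_SHARE],
)
print(f"estimated overall reduction: {estimate:.1f}%")
print(f"gap from reported figure: {estimate - REPORTED_OVERALL:+.1f} points")
```

Under that assumption the estimate lands within a fraction of a point of the reported figure, which suggests the group and overall numbers are at least consistent with one another.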

The difference between two-year and four-year schools might be explained by the fact that two-year schools tend to be less competitive and are likely taking in weaker writers. Those writers may be more prone to unoriginal writing of all kinds, and plagiarism detection software, when used correctly, can be a great teaching tool for reducing that problem.

However, it’s the similarities between the high school and higher education studies that I find truly amazing. The graphs, while not identical, follow a close pattern and show that, across educational institutions, plagiarism detection software is useful if you make a long-term commitment to it. They also show that a short-term rise in unoriginal work is likely in the second full year of use, but that after that bump, things start trending down quickly.

In short, the two results go a long way to validate each other and show that plagiarism detection tools, when used appropriately, can and likely will achieve the desired results over time.

Bottom Line

As with the first study, this is an interesting look at how plagiarism detection tools can impact the production of unoriginal content. While the study has its flaws, it still makes a strong case for the inclusion of plagiarism detection tools in the classroom, in particular as a part of robust education about citation, paraphrasing and proper academic writing.

To be clear, neither Turnitin nor any other plagiarism detection service is a magic bullet for ending unoriginal writing. While the reduction in unoriginal content is impressive, it did not bring a stop to it. Even after five years, students continued to submit unoriginal works that were easily detected by Turnitin.

Still, if used as part of the curriculum and as a teaching tool, plagiarism detection software can do more than catch cheaters. It can be used to help teach students how to write original work and, along the way, produce better papers.

That can only be a good thing for educators and students alike.

Disclosure: I am a paid consultant for iThenticate, a company that is owned by iParadigms, which also owns Turnitin.
